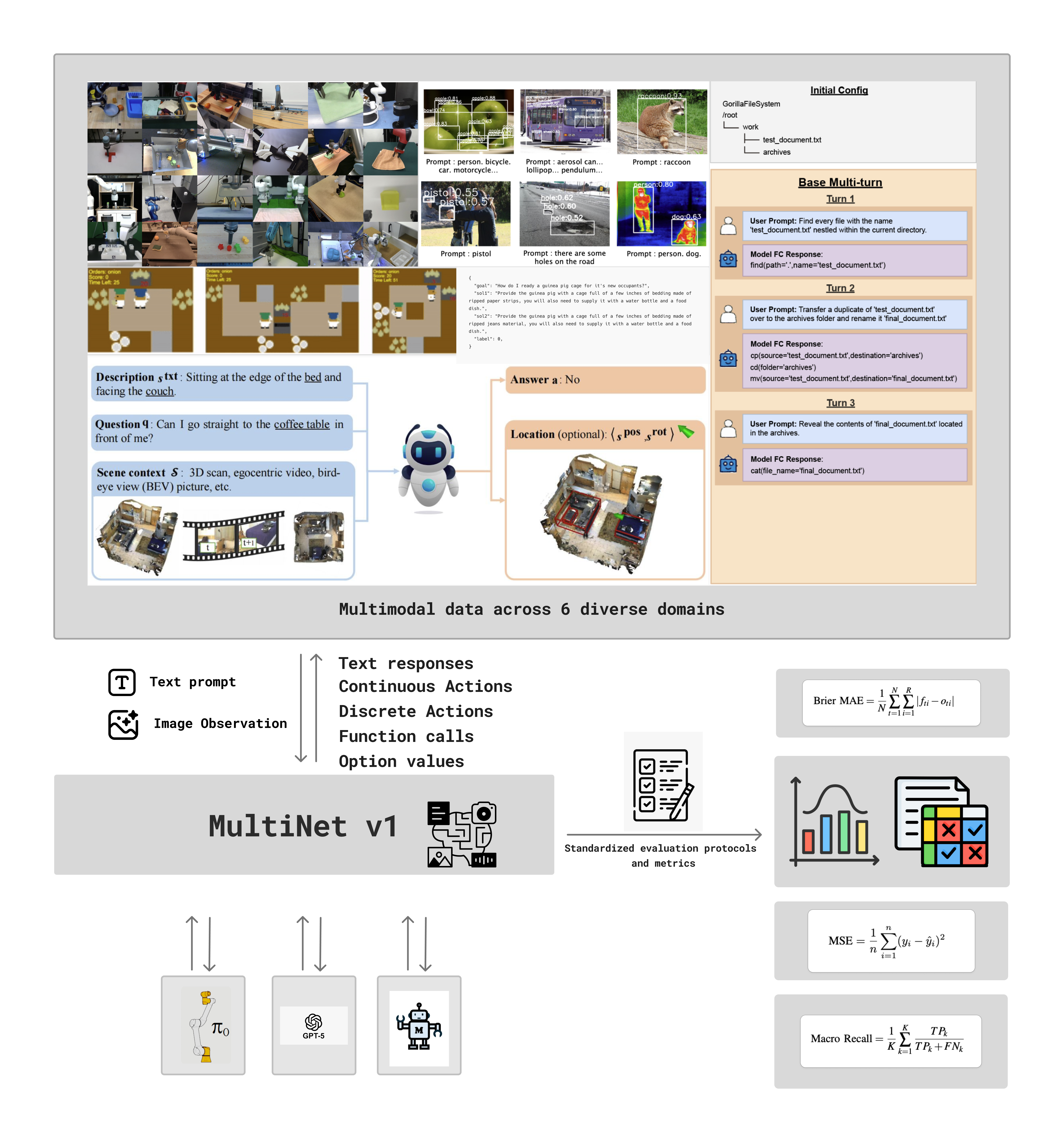

MultiNet v1.0: A Comprehensive Benchmark for Evaluating Multimodal Reasoning and Action Models Across Diverse Domains

MultiNet v1.0 provides a comprehensive benchmark suite for evaluating state-of-the-art multimodal reasoning and action models across diverse domains including robotics, gameplay, and multimodal understanding tasks.

(Hover over the image to enlarge)

Abstract

Multimodal reasoning and action models hold immense promise as general-purpose agents, yet the current evaluation landscape remains fragmented with domain-specific benchmarks that fail to capture true generalization capabilities. This critical gap prevents us from understanding where these sophisticated systems excel and where they fail. We introduce MultiNet v1.0, a unified benchmark suite that bridges this evaluation gap by systematically assessing state-of-the-art Vision-Language Models (VLMs), Vision-Language-Action (VLA) models, and generalist models across robotics, multi-agent gameplay, and multimodal reasoning tasks. Our evaluation suite spans 11 datasets and includes state-of-the-art models such as GPT-5, Pi0, and Magma in their respective categories.

Key contributions of MultiNet v1.0 include:

- Comprehensive Domain Coverage: Evaluation across robotics, gameplay, commonsense reasoning, spatial reasoning, visual question answering, and visual understanding tasks. These capabilities are essential for generalist models and systems.

- Standardized Evaluation Protocols: Unified metrics and evaluation procedures for fair comparison across different model architectures

- Model Adaptation Framework: Open-source code for adapting diverse models to various out-of-distribution task domains

- Extensive Analysis: In-depth analysis of model capabilities, failure modes, and architectural trade-offs

- Open-Source Toolkit: Complete evaluation harness and benchmarking tools for the research community

Dataset Coverage

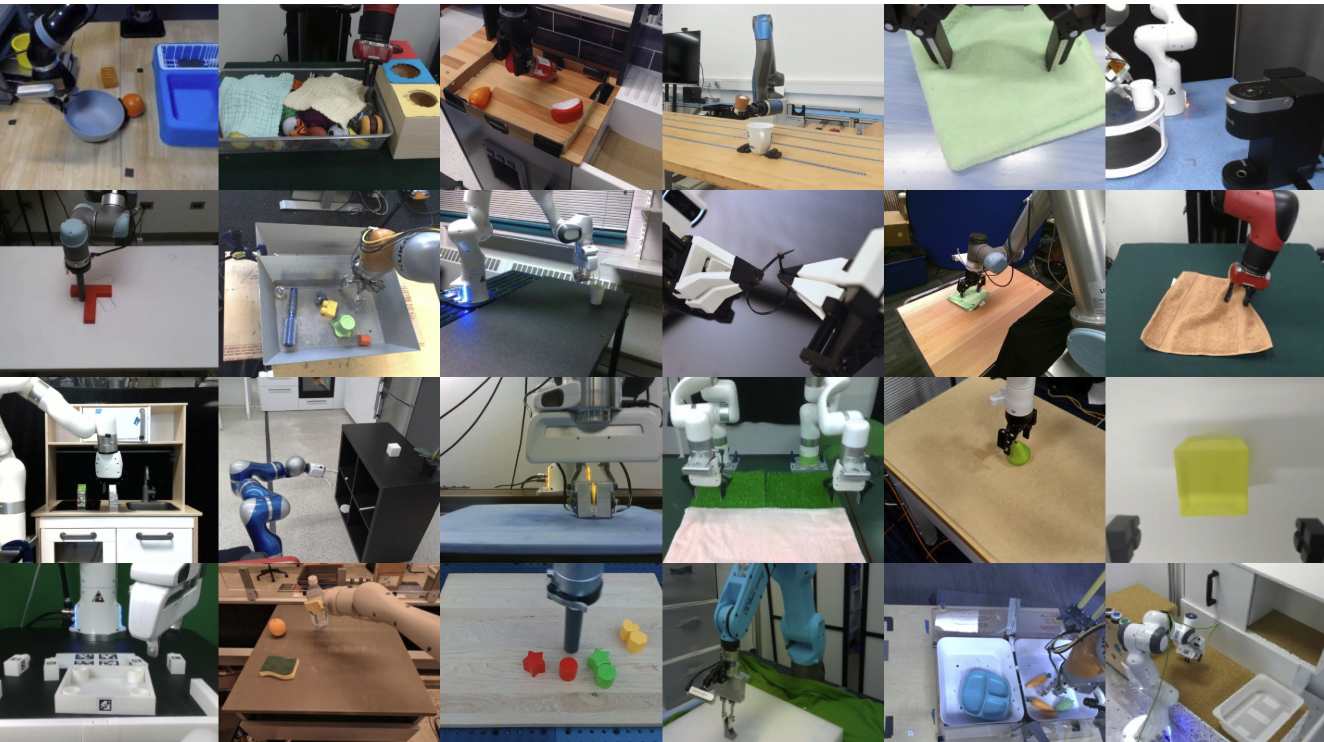

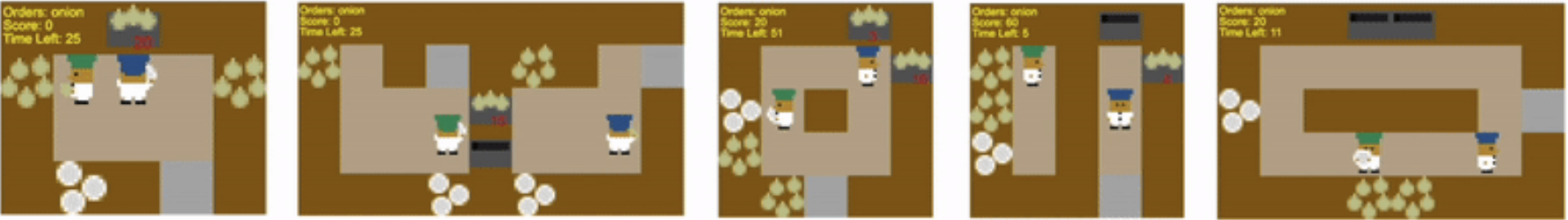

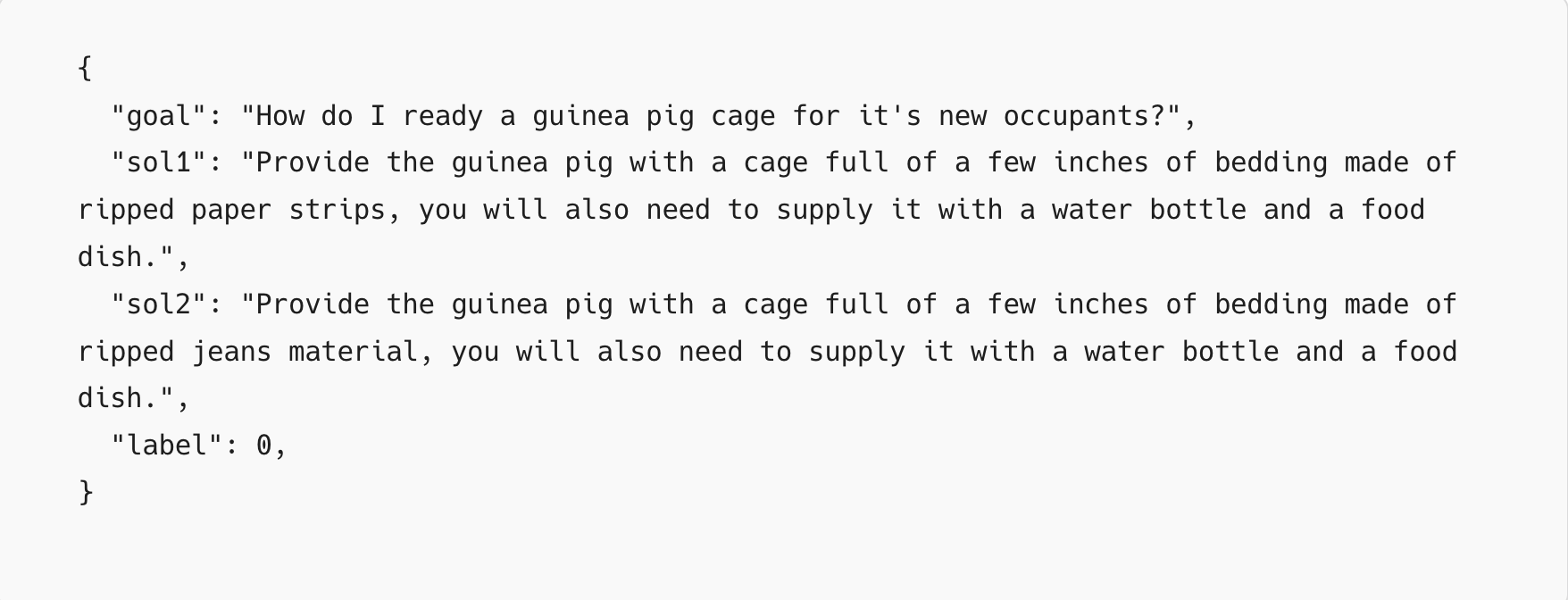

MultiNet v1.0 evaluates models across six major domains using 11 diverse datasets. Each dataset presents unique challenges in vision-language-action understanding, from robotic manipulation to complex reasoning tasks.

| OpenX | Overcooked | PIQA | ODINW | SQA3D | BFCL | |

|---|---|---|---|---|---|---|

| Dataset |  |

|

|

|

|

|

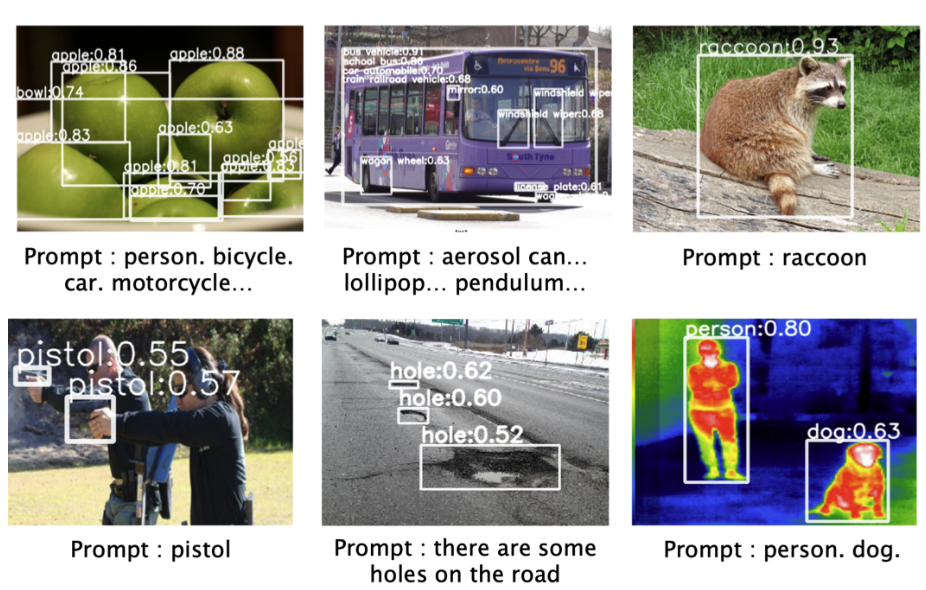

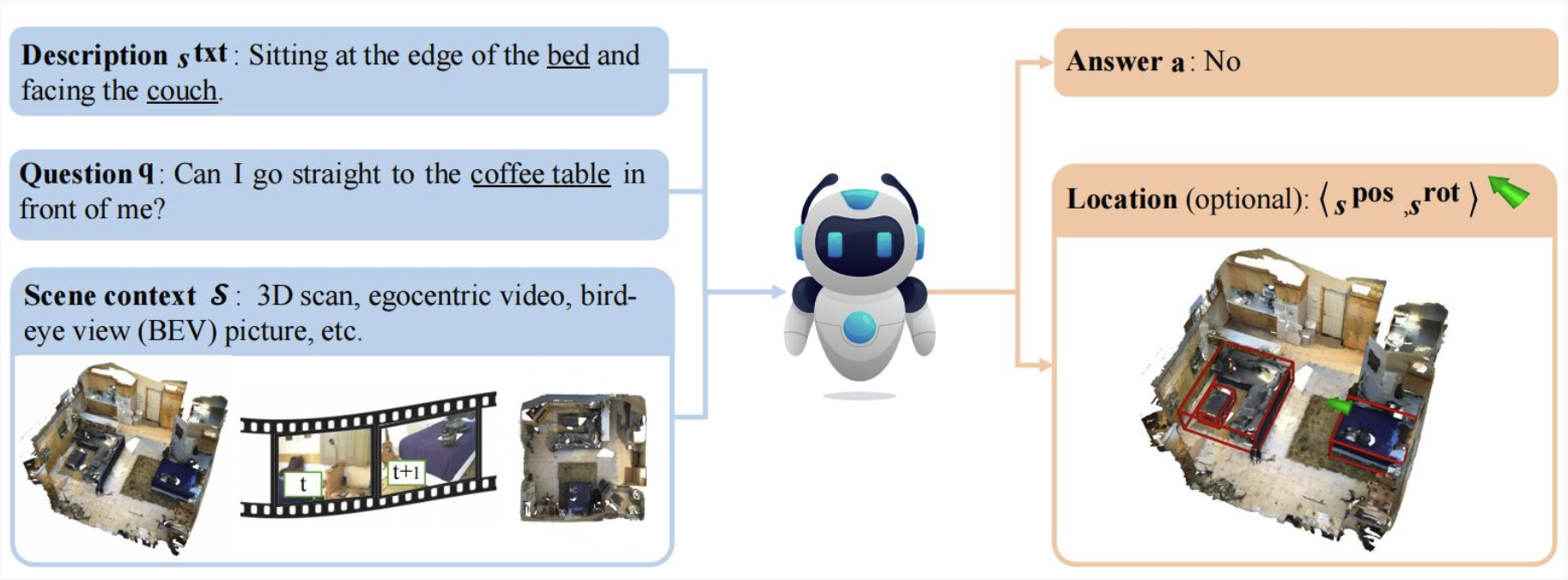

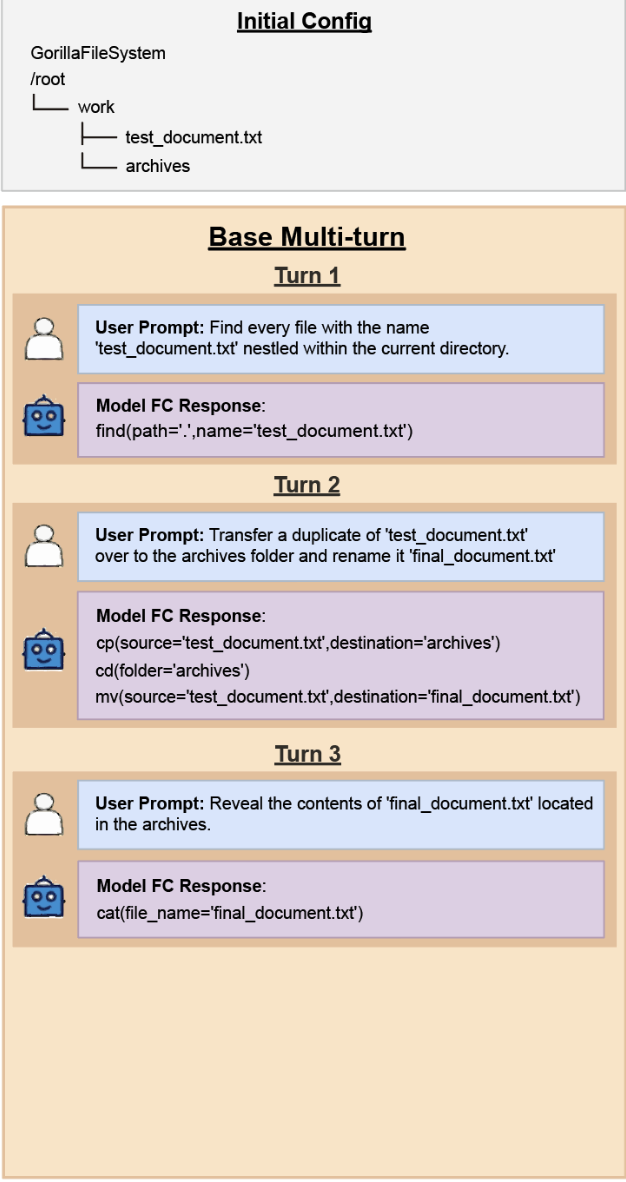

| Description | Large-scale robotics dataset with diverse manipulation and locomotion tasks across multiple robot embodiments and environments | Cooperative cooking simulation requiring coordination, planning, and multi-agent interaction in kitchen environments | Physical interaction question answering dataset testing common-sense reasoning about object properties and interactions | Object detection dataset with diverse domains testing visual recognition across varied contexts and object categories in the wild | 3D scene understanding dataset combining spatial reasoning with question answering in complex indoor environments | Berkeley function calling dataset for evaluating multi-turn conversational function calling capabilities |

| Domain | Robotics | Gameplay | Physical Commonsense Reasoning | Object detection | 3D Spatial Reasoning | Function Calling |

💡 Tip: Hover over images to zoom, or click to view full-size in lightbox

Evaluation Methodology and Metrics

MultiNet v1.0 employs standardized evaluation metrics tailored to each task category, ensuring comprehensive and fair assessment across diverse model architectures. Our evaluation framework adapts metrics to the unique characteristics of each domain while maintaining consistency for cross-domain comparisons:

Brier MAE

Mean Absolute Error of Brier scores.

Brier MAE

Brier Score:

1⁄N ∑t=1N ∑i=1R (fti - oti)2

fti: pred prob class i, time t

oti: ground truth

R: num classes, N: num timesteps.

Brier MAE is a variation of the original Brier score

which is a useful method to measure the accuracy of probabilistic predictions.

Normalized Brier MAE

Normalized version of Brier MAE.

Normalized Brier MAE

Description:

Average of Brier Absolute Errors that have been min-max normalized using the overall min and max Brier absolute errors across all timesteps.

Norm. Quantile Filtered Brier MAE

Normalized Brier MAE filtered by quantiles.

Norm. Quantile Filtered Brier MAE

Description:

Brier MAE values are filtered (only considering the error values that are within the 5th to 95th percentile), and then normalized based on the quantile filtered min/max errors.

Max Relative Brier MAE

Max relative error of Brier scores.

Max Relative Brier MAE

max(MAE) / median(MAE)

Quantifies how the worst-case error deviates from the typical (median) error for a given subdataset.

% Invalid Actions

Predictions outside the valid prediction space.

% Invalid Actions

(Invalid predictions / Total predictions) * 100

Percentage of predictions falling outside the valid prediction space of a subdataset.

Micro Precision

Precision of the model across all predictions.

Micro Precision

Micro Precision:

True Positives / (True Positives + False Positives)

Calculated globally across all predictions.

Micro Recall

Recall of the model across all predictions.

Micro Recall

Micro Recall:

True Positives / (True Positives + False Negatives)

Calculated globally across all predictions.

Micro F1 Score

Harmonic mean of Micro Precision and Recall.

Micro F1 Score

Micro F1:

2 * (Micro Prec * Micro Rec) / (Micro Prec + Micro Rec)

Balanced measure for global performance.

Macro Precision

Avg. of class-wise precision.

Macro Precision

Calculated by averaging class-wise precision scores across all classes. Treats all classes equally.

Macro Recall

Avg. of class-wise recall.

Macro Recall

Calculated by averaging class-wise recall scores across all classes. Important for imbalanced datasets.

Macro F1 Score

Avg. of class-wise F1 scores.

Macro F1 Score

Calculated by averaging class-wise F1 scores. Balanced macro-average performance.

Class-wise Precision

Precision for each class.

Class-wise Precision

Precision calculated individually for each class. Helps understand bias towards specific classes.

Class-wise Recall

Recall for each class.

Class-wise Recall

Recall calculated individually for each class. Shows per-class identification performance.

Class-wise F1 Score

F1 for each class.

Class-wise F1 Score

F1 score calculated individually for each class. Balanced per-class performance.

MSE

Mean Squared Error for continuous action prediction.

Action MSE

MSE = 1⁄N ∑t=1N ||apred - agt||2

Measures accuracy of continuous action predictions in robotics tasks. Lower values indicate better performance.

Action MAE

Mean Absolute Error for continuous action prediction.

Action MAE

MAE = 1⁄N ∑t=1N |apred - agt|

Measures average absolute deviation between predicted and ground truth actions. More robust to outliers than MSE.

Success Rate

Percentage of predictions that match exactly with the corresponding ground truth.

Success Rate

Success Rate = (Successful Predictions / Total Predictions) × 100%

Measures the percentage of predictions that match exactly with ground truth according to task-specific success criteria.

Normalized MSE

Normalized version of Mean Squared Error.

Normalized MSE

Description:

Average of Mean Squared Errors that have been min-max normalized using the overall min and max MSE values across all timesteps.

Normalized MAE

Normalized version of Mean Absolute Error.

Normalized MAE

Description:

Average of Mean Absolute Errors that have been min-max normalized using the overall min and max MAE values across all timesteps.

Norm. Quantile Filtered MAE

Normalized MAE filtered by quantiles.

Norm. Quantile Filtered MAE

Description:

MAE values are filtered (only considering the error values that are within the 5th to 95th percentile), and then normalized based on the quantile filtered min/max errors.

Max Relative MAE

Max relative error of MAE values.

Max Relative MAE

max(MAE) / median(MAE)

Quantifies how the worst-case MAE deviates from the typical (median) MAE for a given subdataset.

Cosine Similarity

Cosine similarity between predicted and ground truth text answer embeddings.

Cosine Similarity

cos(θ) = (A · B) / (||A|| ||B||)

Measures the cosine of the angle between predicted and ground truth text answer embedding vectors. Values range from -1 to 1, with 1 indicating perfect alignment.

Results

Our evaluation across diverse domains reveals significant insights into model performance and capabilities. Below we present detailed results from our evaluation suite.

Model Performance Comparison

Evaluation across 7 diverse tasks across robotics, digital control, and multimodal reasoning.

Task Groups:

Metrics:

Visual Indicators:

Notes:

Model Output Comparison

Compare how different models respond to the same visual input from the ODinW selfdrivingCar dataset

Input

What object is shown in this image from the selfdrivingCar dataset?

Option 0: biker Option 1: car ...

Output the number (0-10) of the correct option only.

Model

Output

Pi0's prediction space collapse visualized

Pi0 experiences prediction space collapse on the Overcooked dataset, centered around the actions 25 and 26, which maps to (Player 1: STAY, Player 2: SOUTH) and (Player 1: STAY, Player 2: EAST) respectively.

Frequency of predicted action classes for Pi0 model on Overcooked dataset

Key Findings and Analysis

1. Catastrophic Cross-Domain Failure: MultiNet v1.0 reveals catastrophic failure at the boundaries of vision-language-action models. No current model achieves true cross-domain generalization — Pi0 performance drops to 0% on basic vision-language tasks. GPT-5 while performing relatively better, still does not achieve anywhere near the performance necessary for succesful task completion.

2. Domain-Specific Fine-Tuning Corruption: Fine-tuning for robotics seems to systematically corrupt vision language models. Pi0 exhibits repetitive "increa" token spam, suggesting that action-oriented training degrades linguistic capabilities through catastrophic forgetting of language generation pathways.

3. Output Modality Misalignment: Magma, designed as a generalist model, produces spatial coordinates instead of text answers when prompted with language tasks. This reveals fundamental misalignment between input processing and output generation across different task domains.

4. Limited Impact of Prompt Engineering: Our prompt engineering experiments only led to marginal gains (~20% improvement) that cannot bridge fundamental architectural incompatibilities. This suggests that current model limitations are structural rather than interface-related.

5. Need for Architectural Innovation: These results demonstrate that current training paradigms create overspecialized models with incompatible domain-specific biases. This necessitates fundamental rethinking of modular architectures and progressive training strategies for truly unified multimodal systems.

Looking Forward

We are exploring several near-term experiments with collaborators, as well as larger-scale research directions that build on MultiNet's findings. If you're interested in contributing to the future of multimodal AI evaluation and development, we encourage you to get involved. Join our Discord community to connect with researchers and explore opportunities to contribute as part of the Manifold Research team.

Near Term Experiments

Building on MultiNet v1.0's findings of catastrophic failure at domain boundaries, our immediate research priorities focus on understanding and mitigating the fundamental limitations of current vision-language & action models. These investigations target the core mechanisms behind knowledge degradation, architectural incompatibilities, and the emergence of failure modes that prevent true cross-domain generalization.

Investigating the Gibberish Outputs of VLAs

- Token degradation analysis: Examine how fine-tuning on action sequences corrupts language generation pathways, particularly the emergence of repetitive tokens like Pi0's "increa" spam

- Mitigation strategies: Develop strategies to mitigate the degradation of language generation pathways despite end-to-end fine-tuning on entirely new data distributions

Investigating SoM/ToM Outputs of Magma

- Coordinate-text mapping errors: Investigate why Magma produces spatial coordinates instead of natural language responses when prompted with inputs of completely different domains, revealing fundamental misalignment in output modalities

- Output modality alignment: Develop fine-tuning/training techniques and architectural modifications to ensure proper alignment between input domains and output modalities, preventing cross-modal contamination in multi-task models

Pi0.5 - SoTA Knowledge-Insulated VLA Performance on v1.0

- Architectural improvements: Evaluate next-generation knowledge insulation techniques in Pi0.5 against MultiNet v1's comprehensive benchmark suite

- Domain transfer efficiency: Measure how effectively Pi0.5 maintains performance across vision-language tasks while adapting to robotic control

- Failure mode comparison: Contrast Pi0.5's failure patterns with previous Vision-Language-Action models to validate architectural advances in preventing catastrophic forgetting

Knowledge Insulation Testing and Experiments

- Modular architecture evaluation: Test compartmentalized model designs that isolate domain-specific knowledge while maintaining shared representations for common reasoning tasks

- Progressive fine-tuning protocols: Investigate learning approaches that gradually introduce new domains without corrupting existing capabilities

Long-term Research Directions

Our long-term vision extends beyond addressing current limitations to fundamentally reimagining how we evaluate, understand, and build multimodal action models. These goals represent paradigm shifts toward more robust, adaptive, and truly general AI systems that can seamlessly operate across diverse domains while maintaining coherent reasoning capabilities.

Live Benchmarks

- Dynamic evaluation frameworks: Develop continuously updating benchmarks that adapt to model capabilities, preventing overfitting to static test sets

- Real-time performance monitoring: Create systems for ongoing assessment of deployed VLAs across diverse real-world scenarios

- Community-driven evaluation: Build platforms for researchers to contribute new tasks and domains, ensuring benchmark relevance as the field evolves

World Models as Evaluators

- Causal reasoning assessment: Build and leverage world models to evaluate whether multimodal systems of the future understand causal relationships rather than just statistical correlations

- Counterfactual analysis: Deploy world models to test the robustness of multimodal systems through systematic perturbation of environmental conditions and task parameters

Building the Next Generation of Multimodal Action Models

- Unified architecture design: Develop foundational architectures that natively support multiple modalities without domain-specific fine-tuning degradation

- Compositional reasoning systems: Create models that can decompose complex tasks into modular components, enabling flexible recombination across domains

- Meta-learning for rapid adaptation: Build systems that can quickly acquire new capabilities while preserving existing knowledge, moving beyond current catastrophic forgetting limitations

Citation

@article{guruprasad2025multinet,

author = {Pranav Guruprasad and Sudipta Chowdhury and Harshvardhan Sikka and Mridul Sharma and Helen Lu and Sean Rivera and Aryan Khurana and Yangyue Wang},

title = {MultiNet v1.0: A Comprehensive Benchmark for Evaluating Multimodal Reasoning and Action Models Across Diverse Domains},

journal = {Manifold Research Publications},

year = {2025},

note = {https://multinet.ai/static/pages/Multinetv1.html},

doi = {10.5281/zenodo.17404313}

} MultiNet

MultiNet