MultiNet: A Generalist Benchmark for Multimodal Action Models

Systems, Algorithms, and Research for Evaluating Next-Generation Action Models

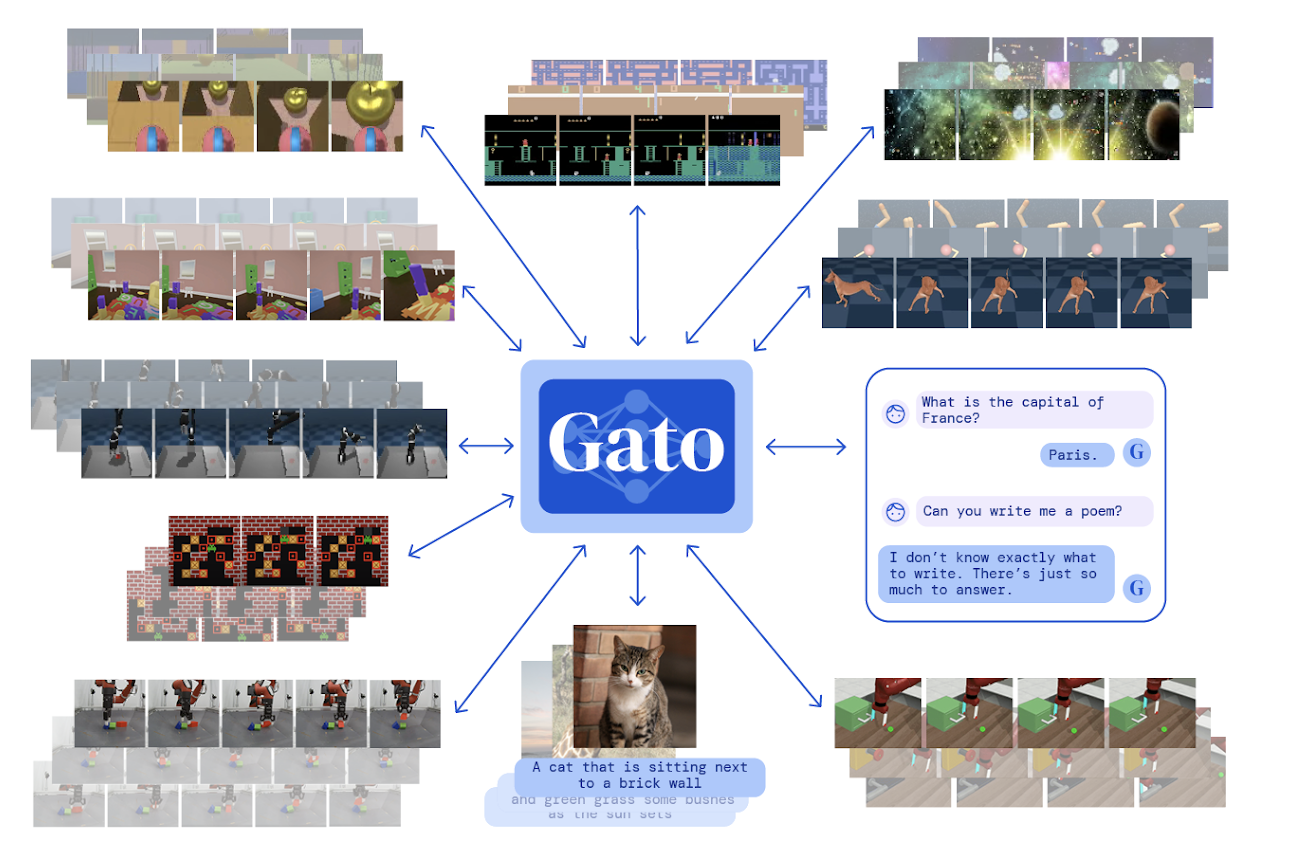

Multinet is a comprehensive benchmarking initiative for evaluating generalist models across vision, language, and action. It provides:

- Consolidation of diverse datasets and standardized protocols for assessing multimodal understanding

- Extensive training data (800M+ image-text pairs, 1.3T language tokens, 35TB+ control data)

- Varied evaluation tasks including captioning, VQA, robotics, game-playing, commonsense reasoning, and simulated locomotion/manipulation

- Open-source toolkit to standardize the process of obtaining and utilizing robotics/RL data

News

Multinet v0.2 released! We systematically profile how state-of-the-art VLAs and VLMs perform in procedurally generated, out-of-distribution (OOD) game environments. Read more about our recent release here.

Paper accepted at ICML 2025! Our paper detailing Multinet's open-source contributions to the AI community has been accepted at the CodeML Workshop at ICML 2025. Read our paper here.

Multinet v0.1 released! How well do state-of-the-art VLMs and VLAs perform on real-world robotics tasks? Read more on our release page.

Introducing Multinet! A new generalist benchmark for evaluating vision, language, and action models. Learn more here.

Research Talks & Demos

Explore our collection of presentations showcasing Multinet's vision, progress, and development journey!

Citations

Multinet v0.2 - Benchmarking Vision, Language, & Action Models in Procedurally Generated, Open Ended Action Environments

@misc{guruprasad2025benchmarkingvisionlanguage,
  title={Benchmarking Vision, Language, \& Action Models in Procedurally Generated, Open Ended Action Environments},
  author={Pranav Guruprasad and Yangyue Wang and Sudipta Chowdhury and Harshvardhan Sikka},
  year={2025},
  eprint={2505.05540},
  archivePrefix={arXiv},
  primaryClass={cs.CV},
  url={https://arxiv.org/abs/2505.05540},
}

Multinet v0.1 - Benchmarking Vision, Language, & Action Models on Robotic Learning Tasks

@misc{guruprasad2024benchmarkingvisionlanguage,
  title={Benchmarking Vision, Language, \& Action Models on Robotic Learning Tasks},
  author={Pranav Guruprasad and Harshvardhan Sikka and Jaewoo Song and Yangyue Wang and Paul Pu Liang},
  year={2024},
  eprint={2411.05821},
  archivePrefix={arXiv},
  primaryClass={cs.RO},
  url={https://arxiv.org/abs/2411.05821},
}

Multinet Vision and Dataset Specification

@misc{guruprasad2024benchmarking,
  author={Guruprasad, Pranav and Sikka, Harshvardhan and Song, Jaewoo and Wang, Yangyue and Liang, Paul},
  title={Benchmarking Vision, Language, \& Action Models on Robotic Learning Tasks},
  DOI={10.20944/preprints202411.0494.v1},
  year={2024},
}
